Could a chatbot help ease depression symptoms by 51%? Research suggests it’s possible. As mental health challenges grow, generative AI therapy is emerging as a promising option for depression relief in 2025, according to a Dartmouth study. This post explores how AI therapy tools like Therabot could transform depression relief, along with their benefits and challenges. From RSA Conference 2025 to Quadratic AI, AI is reshaping lives. Let’s dive in!

Table of Contents

AI Therapy: A New Hope for Depression

What Is Generative AI Therapy?

How Therabot Works

Dartmouth’s Breakthrough Study

Key Findings

Benefits of AI Therapy

Accessibility

Scalability

Consistency

Challenges and Risks

Safety Concerns

Human Nuance

Ethical Issues

Frequently Asked Questions

Conclusion: Embracing AI’s Potential

AI Therapy: A New Hope for Depression

What Is Generative AI Therapy?

Generative AI uses models trained on large amounts of data to produce human-like text and other content. In therapy, it powers chatbots like Therabot, which simulate conversations to support mental health, per MIT Technology Review. Unlike scripted bots, these chatbots adapt in real time, offering personalized responses for depression, anxiety, or eating disorders. This aligns with AI innovations like Claude 3.5.

How Therabot Works

Therabot engages users via a smartphone app, responding to typed prompts or open-ended chats. It uses natural language processing to tailor support, calming anxious users or uplifting those with depression. Its flexibility makes it a game-changer, per Technology Networks.

Dartmouth’s Breakthrough Study

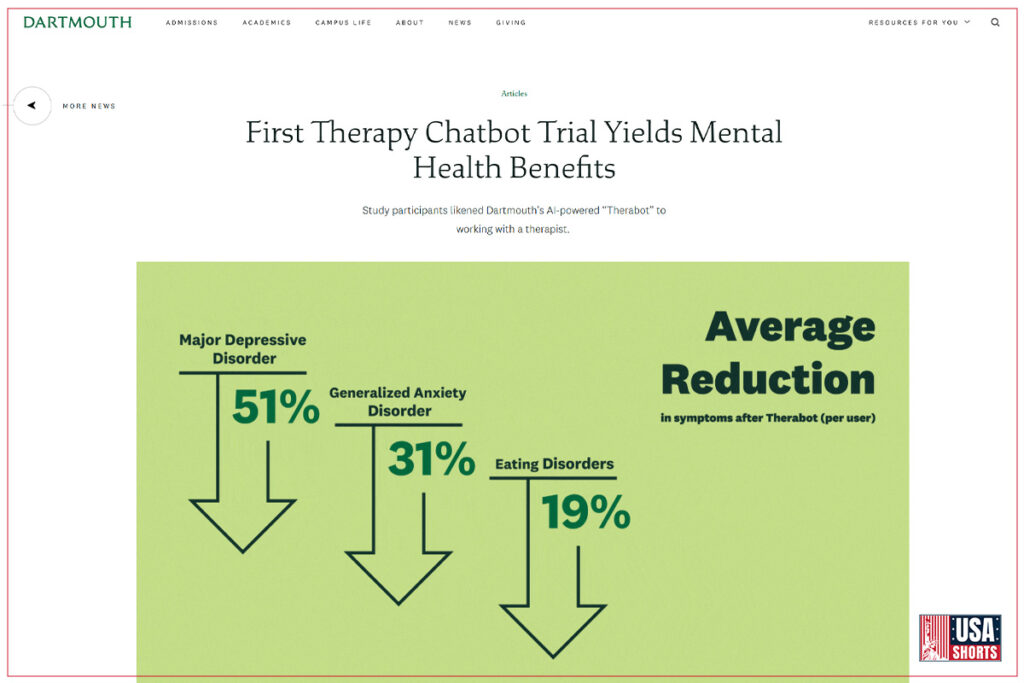

In March 2025, Dartmouth’s Therabot trial marked a milestone in AI therapy. The study, published in NEJM AI, involved 106 U.S. participants with depression, anxiety, or eating disorders, who interacted with Therabot over several weeks.

Key Findings

- Depression: 51% average reduction in symptoms, a clinically significant improvement.

- Anxiety: 31% average reduction in symptoms, improving daily functioning.

- Eating Disorders: 19% average reduction in body image concerns.

- Participants reported trusting Therabot to a degree comparable to human therapists, per New York Times.

This complements tools like Generative AI as a Therapist, but researchers stress human oversight is vital.

Table: Dartmouth Therabot Study Results

| Condition | Symptom Reduction | Impact |
|---|---|---|
| Depression | 51% | Improved mood, well-being |
| Anxiety | 31% | Reduced daily stress |
| Eating Disorders | 19% | Better body image |
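To make a figure like "51% symptom reduction" concrete, here is a minimal Python sketch of one common way to read it: the drop from a baseline symptom score to a follow-up score, expressed as a share of the baseline. The function name `percent_reduction` and the scores below are hypothetical illustrations, not data or methods taken from the Dartmouth study, which may have computed its averages differently.

```python
def percent_reduction(baseline: float, follow_up: float) -> float:
    """Return the percentage drop from a baseline score to a follow-up score."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - follow_up) / baseline * 100.0


# Hypothetical scores chosen for illustration only (not from the study):
# a questionnaire score that falls from 20.0 at the start to 9.8 later on.
reduction = percent_reduction(20.0, 9.8)
print(f"{reduction:.0f}% reduction")
```

Read this way, a 51% reduction means the follow-up score is roughly half the starting score on whatever scale is used to measure symptoms.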

Benefits of AI Therapy

AI therapy offers transformative advantages, per Wellcome.

Accessibility

Unlike traditional therapy, AI can reach underserved areas and support users anytime, anywhere, as seen in No More Language Barriers.

Scalability

Chatbots can serve many users at once, helping to offset therapist shortages, unlike Copilot Studio.

Consistency

Available 24/7, AI ensures steady support, complementing ChatGPT AI Images.

Challenges and Risks

AI therapy isn’t flawless, per PMC.

Safety Concerns

Bots may misread severe symptoms (e.g., suicidal thoughts), requiring human oversight, unlike Microsoft’s AI Security.

Human Nuance

AI lacks deep empathy, potentially alienating users, per New York Times.

Ethical Issues

Privacy, bias, and consent issues persist and call for regulation, as in Apple AI Privacy.